Contract details may differ slightly, but in general, Operators are charged a fixed number of credits per model run. The following guidelines will help you estimate how many credits your runs use.

- Failed and aborted runs are never billed. Only runs that result in a success are billed.

- Runs using the Demo dashboard are typically not billed (although we reserve the right to limit the total number of runs in the Demo dashboard).

- If Run Type = “Model run”, it will count as 4 credits.

- If Run Type = “Parameter Recovery”, it will count as 4 credits.

-

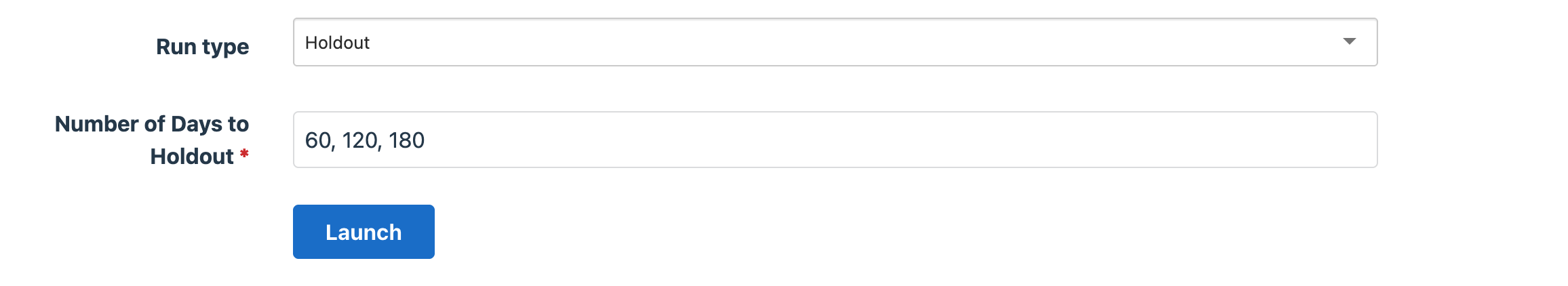

If Run Type = “Holdout” it will count as the number of distinct elements in “Number of Days to Holdout” times 4. For example, the screenshot below would count as 12 credits:

This will launch 3 runs (and cost 12 credits): one with 60 days heldout, one with 120, and one with 180

-

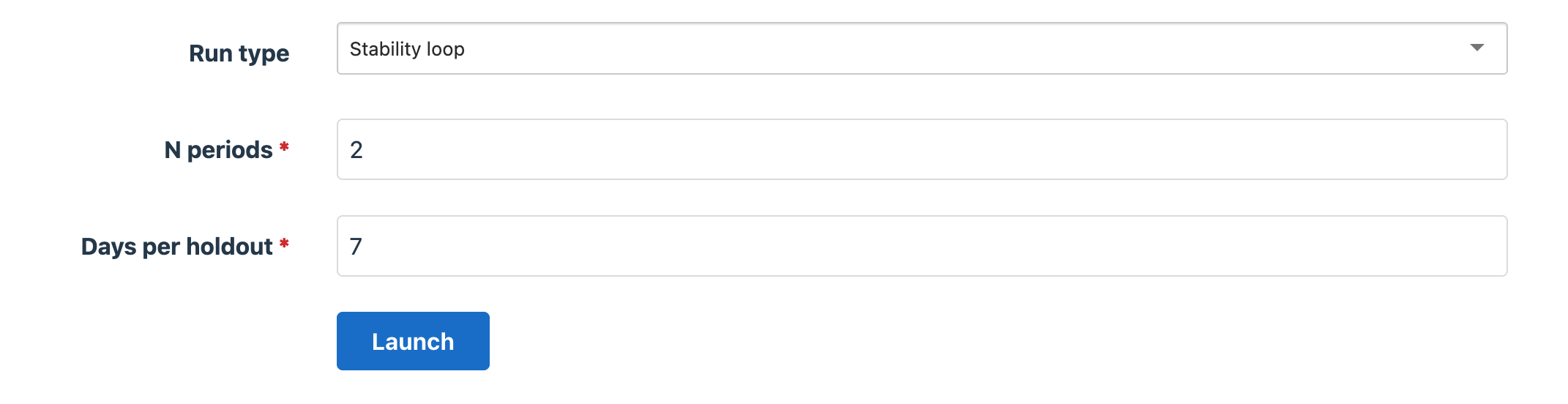

If Run Type = “Stability Loop” it will count as (“N Periods” + 1) * 4. This is because if you set N periods to two, we fit three models. One for two weeks ago, one for last week, and one for this week.

This will be billed as 12 credits
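The rules above can be sketched as a small estimator. This is only an illustration under stated assumptions, not Recast's billing logic: the function name `estimate_credits` and its parameters are hypothetical, the 4-credit figure is the one quoted above (your contract may differ), and the sketch covers only successful, non-demo runs.

```python
# Hypothetical helper for estimating credits from the guidelines above.
# Assumes the launch succeeds and is not run from the Demo dashboard.
CREDITS_PER_FIT = 4  # figure quoted in the guidelines; contracts may differ


def estimate_credits(run_type, holdout_days=(), n_periods=0):
    """Return the estimated credit cost of one launch."""
    if run_type in ("Model run", "Parameter Recovery"):
        fits = 1
    elif run_type == "Holdout":
        # One fit per distinct value in "Number of Days to Holdout".
        fits = len(set(holdout_days))
    elif run_type == "Stability Loop":
        # "N Periods" + 1 models are fit.
        fits = n_periods + 1
    else:
        raise ValueError(f"Unknown run type: {run_type!r}")
    return fits * CREDITS_PER_FIT


print(estimate_credits("Holdout", holdout_days=[60, 120, 180]))  # 12
print(estimate_credits("Stability Loop", n_periods=2))           # 12
```

Both printed values match the two 12-credit examples described above.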

Cost Management Advice

Recast makes it easy to launch and train media mix models. Part of the zen of Recast is that we believe it is generally better to pay more for additional computation upfront to validate a model than it is to pay many times that in sweat and tears when a client takes action on a model that isn’t actually correct.

This ease is a double-edged sword: it allows you to efficiently test and verify lots of different candidate models to determine which one performs best, but it also means you can quickly run up considerable compute costs or use up many model run credits unnecessarily.

So, we think it’s important to use your run credits wisely: get the most bang for your buck in terms of compute and sensitivity analyses, without trading short-term credit savings for poor long-term model quality. As such, here are some tips and tricks for getting the most out of your Recast credits.

Tip #1: Be careful with backtest and stability-loop runs, since these burn through credits the fastest

Backtests (Holdout runs) and stability loops are powerful tools for validating a media mix model, but they’re computationally intensive and will eat through a lot of Recast credits very quickly. Be thoughtful when you’re launching these runs to make sure you’re not wasting credits on superfluous or repetitive checks.

Tip #2: At the beginning of a model build or troubleshooting session, parameter recovery and “standard” model runs should be your primary focus

Standard “model runs” and “parameter recovery” exercises use far fewer credits (4 each) and can generally be used to fix most large problems in your model. You should mostly use these runs at the beginning of the model build to rule out major issues in the model (via the parameter recovery check) as well as to validate that the inferences from the model are “reasonable” and that the 30-day holdout accuracy is acceptable before moving on to the final checks.

Tip #3: Use longer-term backtests or stability loops as a “final-check” for your model

Once you have a model that produces reasonable results and reasonable 30-day backtests, you can move on to the final “confirmatory” checks of longer backtests and model stability. The defaults that show up in the run launching panel are good, but you can also save on credits by running sparser stability loops (every 14 days instead of every 7) or by focusing only on the backtests that you care most about.

Tip #4: Be deliberate in which runs you’re launching

Broadly, the advice is to just be deliberate. With every run you’re launching, think about what question you’re trying to answer and don’t launch unnecessary runs that aren’t answering a specific question.

In stability loops, if you’ve been having issues with low stability between just two runs, limit your stability loops to just those two runs until the issue is fixed. Once it’s fixed, then you can go back and do the “full run” to confirm there were no other regressions.

With holdout forecasts, if one particular time period is giving you trouble, it’s totally reasonable to focus just on that time period while you’re iterating so that you aren’t “checking” a bunch of time periods that already seem to be working, or that might change once you fix your core issue.
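The saving from focusing on one holdout period while iterating can be sketched with some simple arithmetic. This is a rough illustration rather than Recast's billing logic: the helper name `holdout_credits`, the five iterations, and the choice of 120 days as the troublesome period are all hypothetical, and the 4-credit figure is the one quoted in the guidelines (your contract may differ).

```python
# Hypothetical comparison: rerun every holdout on each iteration, or
# iterate on the problem period only and confirm with one full run.
CREDITS_PER_FIT = 4  # figure quoted in the guidelines; contracts may differ


def holdout_credits(holdout_days):
    """Credits for one Holdout launch: one fit per distinct holdout length."""
    return len(set(holdout_days)) * CREDITS_PER_FIT


iterations = 5               # assumed number of fix attempts
full_set = [60, 120, 180]    # all holdout lengths of interest
problem_only = [120]         # assumed troublesome period

# Option A: launch the full set on every iteration.
cost_full_every_time = iterations * holdout_credits(full_set)

# Option B: iterate on the problem period, then one full confirmatory run.
cost_focused = iterations * holdout_credits(problem_only) + holdout_credits(full_set)

print(cost_full_every_time, cost_focused)  # 60 32
```

Under these assumptions, the focused approach uses 32 credits instead of 60 and still ends with a full run to confirm there were no regressions.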